Some notes on what we do with the RGB image we get from the Kinect camera and how we detect blobs.

1. We grab one frame from the camera

2. Background Subtraction

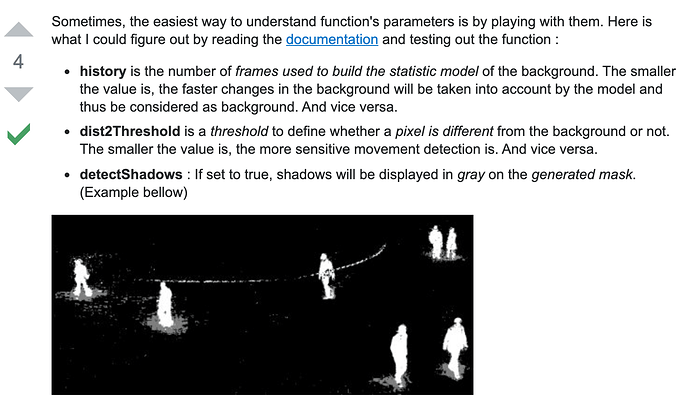

To get only the moving parts of an image, we do a background subtraction.

This is like green screening: we only want to extract the interesting information from the image.

https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_video/py_bg_subtraction/py_bg_subtraction.html

We use cv2.createBackgroundSubtractorKNN(). We could also use cv2.createBackgroundSubtractorMOG2().

The default parameters of KNN are:

cv2.createBackgroundSubtractorKNN(history=500, dist2Threshold=400.0, detectShadows=True)

There is another parameter we can set, the learning rate, which is passed to the subtractor's apply() method. A negative value such as -1 lets OpenCV choose the rate automatically, 0 means that the background model is not updated at all, and 1 means that the background model is completely reinitialized from the last frame.

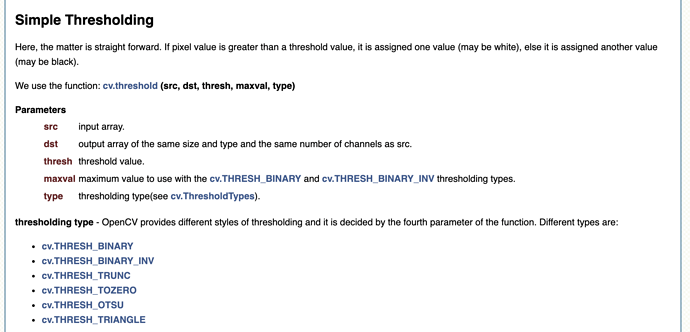

3. Image Thresholding

We threshold the mask to get rid of any pixels that are too close to the background color black. If we leave shadow detection on, there seem to be only some grey pixels left after the background subtraction (the subtractor marks shadows in grey, 127 by default). If we disable shadow detection, I think we do not need this threshold. Every pixel brighter than 127 is set to 255 (white); every pixel at 127 or below is set to 0 (background).

cv2.threshold(src, thresh, maxval, type[, dst])

cv2.threshold(fgMask,127,255,cv2.THRESH_BINARY)

This is a great website for trying out threshold values:

https://docs.opencv.org/master/d7/dd0/tutorial_js_thresholding.html

https://docs.opencv.org/2.4/doc/tutorials/imgproc/threshold/threshold.html

4. Morphological Transformations

The transformations need two inputs. One is the original image; the second is called the structuring element or kernel, which decides the nature of the operation.

The kernel slides over the image, and each output pixel is computed from the pixels that the kernel covers.

kernel = np.ones((3,3), np.uint8)

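- erode: erodes away the boundaries of the foreground object and takes care of white noise. (We do not use it in the end because cv2.connectedComponentsWithStats() does not work well with the eroded image.)

- dilate: grows the boundaries of the foreground object, the opposite of erosion.

- morphologyEx: cv2.morphologyEx() combines erosion and dilation into operations such as opening and closing.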

https://docs.opencv.org/trunk/d9/d61/tutorial_py_morphological_ops.html

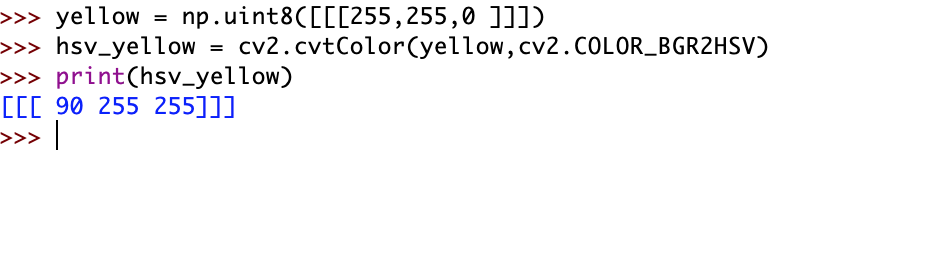

5. Creating a BGR Color Image

We add color so we can draw colored boxes around the blobs later.

We convert the mask from grayscale to BGR and assign the result to the variable col.

BGR is the channel order that OpenCV uses for color images.

col = cv2.cvtColor(fgMask, cv2.COLOR_GRAY2BGR)

https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_imgproc/py_colorspaces/py_colorspaces.html

6. Blob Detection

With cv2.connectedComponentsWithStats() we then detect the position and size of the blobs. An alternative would be cv2.findContours(), but this only gives us the points of the outline around the blobs, and we would need to calculate the size and position ourselves.

There is also cv2.SimpleBlobDetector, which also works with the OpenCV version (3.2.0) that we have on the Pi. With this method we will try to write a script that triggers the relay when a blob reaches a certain size.

https://docs.opencv.org/3.4/d0/d7a/classcv_1_1SimpleBlobDetector.html

https://docs.opencv.org/master/d5/daf/tutorial_py_histogram_equalization.html